I’m going to take a page out of Richard Hirsch’s playbook and try to reverse engineer what SAP must mean when it talks about “Zero Copy” in the context of SAP Business Data Cloud (BDC) data product usage, especially where Delta Share is concerned. At Mindset Consulting, LLC, we’ve noticed that when SAP uses these terms, it isn’t particularly clear what it means, and the terms are increasingly being used in ways that appear contradictory. Unlike Richard, I tend to approach these questions theoretically rather than directly from documentation, marketing, or public statements, much of which is contradictory anyway. I have taken in a lot of all three over the last week, and it informs this analysis, but the analysis is a step removed from that material. That is why you won’t see many links below, and I think it’s important to say so up front so you have a better idea of where this analysis may go wrong. Now, to start us off, a couple of definitions!

Zero Copy: The idea that data can be operated on without copying it. It’s important to note that there is no such thing as “zero copy” in the pure sense in computing. If you have data on your local disk and you want to display it on your screen, the data is copied from disk into RAM, from RAM into your CPU’s caches and registers, and from there into the display buffer that drives your screen. A client-server example might be an SQL query that gets the top 10 customers from a sales database: the database server does a bunch of processing (involving a lot of local copying), then sends the 10-record result set to the client. That word “sends” is doing a lot of work here. It’s another word for “copy”.
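To make the client-server example concrete, here is a minimal sketch using Python’s built-in `sqlite3` module. The `sales` table, its columns, and the synthetic data are hypothetical, and SQLite runs in-process rather than over a network, so the “send” here is itself a local copy. The point is only the ratio between what the engine touches and what the caller receives.

```python
import random
import sqlite3

# In-memory database standing in for the "server"; table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (customer_id INTEGER, amount REAL)")

# Populate with synthetic rows: 1,000 customers, 100,000 sales lines.
rng = random.Random(42)
rows = [(rng.randint(1, 1000), round(rng.uniform(1, 500), 2)) for _ in range(100_000)]
conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)

# The engine scans and aggregates all 100,000 rows locally (lots of local copying),
# but only the 10-row result set is handed back to the caller.
top10 = conn.execute(
    """
    SELECT customer_id, SUM(amount) AS total
    FROM sales
    GROUP BY customer_id
    ORDER BY total DESC
    LIMIT 10
    """
).fetchall()

print(f"rows scanned by the engine: {len(rows)}")
print(f"rows returned to the client: {len(top10)}")
```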

So, let’s just establish that when technologists say “zero copy”, what they actually mean is “minimal copying”. In other words, we are somewhere near the least amount of copying and data movement possible to achieve the task. Additionally, we usually privilege local copying over network copying, because local copying is usually (though not always) much faster, and also we tend to forget it’s happening (which can be dangerous).
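The same “copying we forget about” shows up inside a single process. A minimal, purely illustrative Python sketch (unrelated to any SAP or Databricks API): slicing a `bytearray` copies the bytes, while slicing a `memoryview` gives a window onto the same buffer with no copy at all.

```python
# A local, in-process illustration of "minimal copying" in Python.
payload = bytearray(b"x" * 10_000_000)  # ~10 MB buffer standing in for "data on disk"

# Slicing a bytearray creates a brand-new object: the 5 MB are copied.
copied = payload[:5_000_000]

# Slicing a memoryview creates a view onto the same buffer: no bytes are copied.
view = memoryview(payload)[:5_000_000]

# Mutate the original buffer and see which of the two "copies" notices.
payload[0] = ord("y")

print(copied[0] == ord("y"))  # False: the slice is an independent copy
print(view[0] == ord("y"))    # True: the view shares memory with payload
```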

Delta Share: Databricks’ open data sharing protocol (formally named Delta Sharing), built on top of the Delta Lake storage framework. A Delta Lake table is basically a directory of Parquet files on cloud object storage, plus a transaction log of metadata recording which of those files make up the current version of the table. Delta Share provides an API for secure (sort of), performant (sort of) remote sharing of Delta Lake tables. How is this sharing achieved? By facilitating high-bandwidth copying of the table to the client requesting it: the sharing server hands the client short-lived, pre-signed URLs for the table’s Parquet files, the client downloads those files directly from object storage, and it can then do whatever it needs to with the table contents. SAP has said that Delta Share will be supported by BDC.
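To make “Parquet files plus metadata” a bit more concrete: a Delta table’s metadata is a log of JSON commit files under `_delta_log/`, and a reader works out which Parquet files make up the table at a given version by replaying the `add` and `remove` actions in order. The sketch below is a simplified, hypothetical model of that replay. The file paths, sizes, and the in-memory `commits` dict are made up, real readers also handle checkpoints and protocol/metadata actions, and this is not Delta Lake or Delta Share client code.

```python
import json

# Hypothetical commit files from a table's _delta_log/ directory, keyed by version.
# Real commits also carry protocol, metaData and commitInfo actions; only "add" and
# "remove" matter for working out which Parquet files make up the table.
commits = {
    0: [
        {"add": {"path": "region=EU/part-000.parquet", "size": 400_000_000,
                 "partitionValues": {"region": "EU"}, "dataChange": True}},
        {"add": {"path": "region=US/part-000.parquet", "size": 600_000_000,
                 "partitionValues": {"region": "US"}, "dataChange": True}},
    ],
    1: [
        # An update rewrote the US file: old file logically removed, new file added.
        {"remove": {"path": "region=US/part-000.parquet", "dataChange": True}},
        {"add": {"path": "region=US/part-001.parquet", "size": 650_000_000,
                 "partitionValues": {"region": "US"}, "dataChange": True}},
    ],
}


def snapshot(log: dict, as_of: int) -> dict:
    """Replay commits 0..as_of and return the active files, keyed by path."""
    active = {}
    for version in sorted(v for v in log if v <= as_of):
        for action in log[version]:
            if "add" in action:
                active[action["add"]["path"]] = action["add"]
            elif "remove" in action:
                active.pop(action["remove"]["path"], None)
    return active


# On storage, each commit file is newline-delimited JSON, one action per line.
print("\n".join(json.dumps(a) for a in commits[1]))

current = snapshot(commits, as_of=1)
print(sorted(current))  # ['region=EU/part-000.parquet', 'region=US/part-001.parquet']
print(sum(f["size"] for f in current.values()))  # 1050000000
```

Note that the removed US file is only logically removed; it stays on storage until it is cleaned up, which is what makes reading older versions (`as_of=0`) possible.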

To go back to our example: under Delta Share, if a client wants to find the top 10 customers from a sales table, the entire table (or at least every shared partition the query needs) is copied to the client, often multiple GBs or TBs, and the client is then responsible for storing that data and doing whatever processing is necessary to come up with its 10-record answer. Additionally, since the client has a copy of the table, it can give access to that data to anyone else or do other calculations on it. Delta Share has mechanisms to limit access at the column and partition level, and to provide delta (change capture) updates to tables rather than re-transferring the entire table. But you get the idea of why I said “sort of” about secure and performant sharing above.
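A back-of-the-envelope sketch of the top-10 example, with every number made up for illustration: 150 hypothetical 2 GB Parquet files split across three `region` partitions, and an assumed 64 bytes per result row. It compares the bytes that cross the network when a server computes the answer against a Delta Share client pulling the files and computing locally, plus the effect of a partition filter and of an incremental refresh. It models the copying only, not the Delta Share protocol or any client library, and it simplifies change capture to “new files appear”.

```python
from typing import Optional

GB = 1_000_000_000
ROW_BYTES = 64  # assumed serialized size of one (customer_id, total) result row

# Hypothetical active files of a shared sales table: 3 regions x 50 files x 2 GB.
files = [
    {"path": f"region={r}/part-{i:03d}.parquet", "region": r, "size": 2 * GB}
    for r in ("EU", "US", "APAC")
    for i in range(50)
]


def server_side_query_bytes(result_rows: int) -> int:
    """Server computes the answer; only the result set crosses the network."""
    return result_rows * ROW_BYTES


def delta_share_bytes(shared_files: list, regions: Optional[set] = None) -> int:
    """Client downloads every file it needs; a partition filter shrinks that set."""
    return sum(f["size"] for f in shared_files if regions is None or f["region"] in regions)


def incremental_bytes(shared_files: list, already_have: set) -> int:
    """With change capture, only files the client has not already copied are fetched."""
    return sum(f["size"] for f in shared_files if f["path"] not in already_have)


server_bytes = server_side_query_bytes(10)
full_bytes = delta_share_bytes(files)
eu_bytes = delta_share_bytes(files, {"EU"})

# A later refresh: two new EU files appear; the client already holds the other 150.
new_files = [
    {"path": f"region=EU/part-{i:03d}.parquet", "region": "EU", "size": 2 * GB}
    for i in (50, 51)
]
seen = {f["path"] for f in files}
refresh_bytes = incremental_bytes(files + new_files, seen)

print(f"server-side top 10:       {server_bytes:>17,} bytes")
print(f"Delta Share, whole table: {full_bytes:>17,} bytes")
print(f"Delta Share, EU only:     {eu_bytes:>17,} bytes")
print(f"incremental refresh:      {refresh_bytes:>17,} bytes")
```

Even with the partition filter and incremental refresh, the client still receives whole files (roughly 100 GB here), while the server-side answer is a few hundred bytes.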

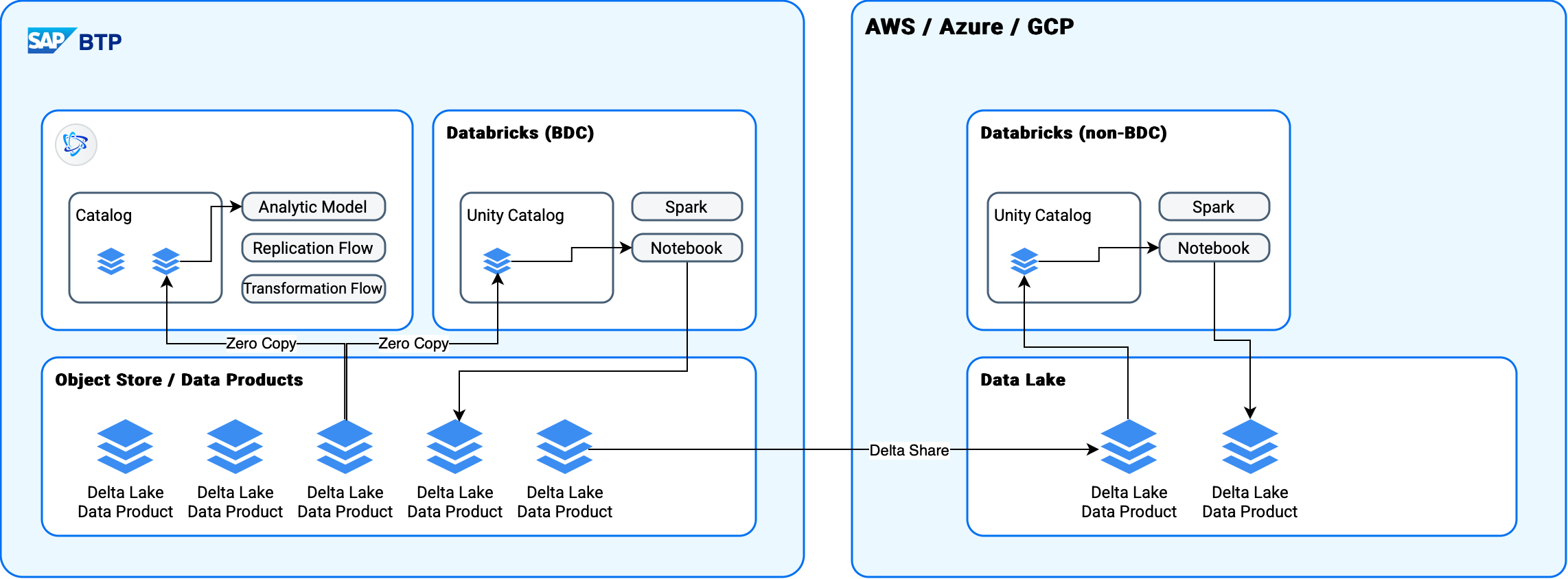

Now that we’ve got our definitions in place, what does this mean for SAP’s BDC? SAP BDC includes a version of Databricks hosted by SAP, and a lot of the recent discussion has been about performance and data duplication when Databricks works on BDC data managed by Datasphere (SAP’s data-warehouse / -lake / -catalog product). There are also questions about what this looks like with non-BDC Databricks installations, where the promise is that Delta Share will solve the problem. Well, I’ve got good news and bad news:

- For the BDC Databricks, I expect that we will see real “zero” (i.e. “minimal”) copy functionality based on the use of Delta Lake data products in a shared repository. In other words, I expect that Datasphere and Databricks will access the very same files in the same repository. Of course, if Databricks (or Datasphere) is used to create a derivative, materialized data product, that will result in duplication. In Datasphere, the default mode of data product creation is view-based, not materialized, which does not result in a copy, but if a transformation flow is used, we will see copying. In Databricks, the default mode of data product creation is materialized, so we will still see a lot of copying. At least these new copies should be available to Datasphere without further copying.

- For the non-BDC Databricks, we will see Delta Share used to facilitate access to data persisted in BDC, which means a copy of the original data product. Additionally, derivative data products created in Databricks will not, by default, be available to BDC. They would have to be reimported (i.e. copied) into BDC using a replication flow, or possibly via Delta Share if SAP starts supporting BDC as a Delta Share client.
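The two scenarios above can be compared with a toy storage-accounting model. Everything here is hypothetical: the `ObjectStore` class, the paths, and the 500 GB / 20 GB sizes are made up, scenario 1 encodes my expectation (not anything SAP has confirmed) that BDC Databricks and Datasphere share one repository, and scenario 2 assumes the Delta Share client persists what it pulls. It counts bytes at rest only, not network transfer.

```python
from dataclasses import dataclass, field

GB = 1_000_000_000


@dataclass
class ObjectStore:
    """Toy stand-in for an object store: tracks how many bytes are physically stored."""
    files: dict = field(default_factory=dict)  # path -> size in bytes

    def write(self, path: str, size: int) -> None:
        self.files[path] = size

    def stored_bytes(self) -> int:
        return sum(self.files.values())


def bdc_databricks(product_gb: int, derived_gb: int) -> int:
    """Scenario 1 (my expectation, not confirmed): both engines read one shared store."""
    store = ObjectStore()
    store.write("foundation/sales", product_gb * GB)  # original data product
    # A Datasphere view-based product is just a definition over existing files: no write.
    # A Databricks materialized derivative is a new set of files in the same store.
    store.write("derived/sales_summary", derived_gb * GB)
    return store.stored_bytes()


def external_databricks(product_gb: int, derived_gb: int) -> int:
    """Scenario 2: separate stores, Delta Share out and a replication flow back in."""
    bdc, customer = ObjectStore(), ObjectStore()
    bdc.write("foundation/sales", product_gb * GB)
    customer.write("shared/sales", product_gb * GB)         # copy pulled via Delta Share
    customer.write("derived/sales_summary", derived_gb * GB)
    bdc.write("imported/sales_summary", derived_gb * GB)    # replication flow back into BDC
    return bdc.stored_bytes() + customer.stored_bytes()


print(bdc_databricks(500, 20) // GB)       # 520
print(external_databricks(500, 20) // GB)  # 1040
```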

Now, there are some unknowns here, because SAP hasn’t shared the technical details of how the BDC Databricks will access data, nor details such as how view-based data products will be accessed. Further, I’m assuming that Datasphere access to data products will work using the same mechanism as the Object Store processing framework. Here are the primary questions that I have not seen answered:

- Will the BDC Databricks really use a zero-copy approach to data access, or will it be set up as a Delta Share client under the covers and copy the BDC data products to its own data lake? I expect that a zero-copy approach will be used, but it’s hard to say for sure.

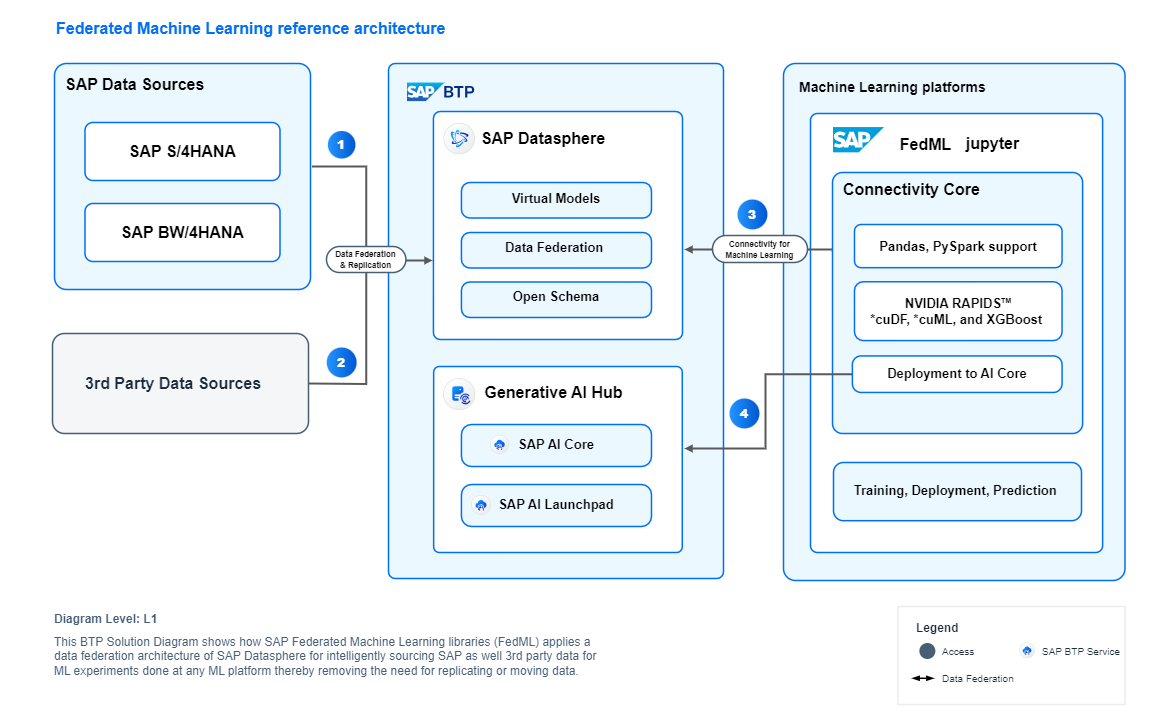

- Will the BDC Databricks be able to access and operate on view-based data products that are created in Datasphere, or only on materialized data products persisted in the object store (which SAP is calling the foundation layer)? I suspect the latter, at least initially, though I expect that SAP will eventually offer something similar to FedML, allowing view-based data products to be used in notebooks and Spark processes.

- Does replication of data product metadata between the Datasphere Catalog and Unity Catalog happen automatically or do we have to set up that replication on a per-data product basis?

- When a data product is updated, what is the transactional/locking story for both write/write contention and read/write contention? Related: will Delta Share updates be supported?
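For context on the last question: Delta Lake itself handles write contention with optimistic concurrency control. Each commit claims the next numbered entry in the transaction log, a writer that loses the race must re-read the log, check for conflicts, and retry, and readers work from an already-committed snapshot rather than taking locks. Below is a toy in-memory model of that idea; the `ToyDeltaLog` class is hypothetical and skips real conflict detection, and whether BDC data products inherit exactly this behavior is the open question.

```python
import threading


class ToyDeltaLog:
    """Hypothetical, in-memory model of Delta Lake-style optimistic concurrency.

    A commit succeeds only if the next version number is still free
    (a put-if-absent on the next log entry); otherwise the writer must
    re-read the log, check for conflicts, and retry.
    """

    def __init__(self) -> None:
        self._versions: dict = {}
        self._lock = threading.Lock()  # stands in for the store's atomic put-if-absent

    def latest_version(self) -> int:
        return max(self._versions, default=-1)

    def try_commit(self, version: int, actions: list) -> bool:
        with self._lock:
            if version in self._versions:
                return False  # another writer already claimed this version
            self._versions[version] = actions
            return True

    def snapshot(self, as_of: int) -> list:
        """Readers see only fully committed versions <= as_of; no read locks needed."""
        return [a for v in sorted(self._versions) if v <= as_of for a in self._versions[v]]


log = ToyDeltaLog()
log.try_commit(0, ["add part-000"])

# Two writers both read version 0 and race to write version 1.
read_version = log.latest_version()
writer_a_ok = log.try_commit(read_version + 1, ["add part-001"])
writer_b_ok = log.try_commit(read_version + 1, ["add part-002"])
print(writer_a_ok, writer_b_ok)  # True False

# Writer B re-reads the log and retries at the next free version
# (a real implementation would first check whether A's changes conflict with B's).
writer_b_ok = log.try_commit(log.latest_version() + 1, ["add part-002"])
print(writer_b_ok, log.snapshot(as_of=1))  # True ['add part-000', 'add part-001']
```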

This is what I’ll be keeping my eyes open for over the next couple of months as BDC moves through initial roll-out into general availability, and beyond.

If your company is working to understand SAP BTP, BDC, Datasphere, SAP Analytics Cloud, BW and how they fit into your future enterprise roadmap or integrate with non-SAP analytics infrastructure, we live for this stuff at Mindset Consulting, LLC. I hope you’ll come chat with us about it.